Preparing your Organization for AI-Driven Identity Threats

February 5, 2026

Duration: 5 min read

Introduction

As AI agents, models, and automation become more deeply embedded across enterprise environments, organizations must rethink how identity governance, privilege, and access controls apply beyond human users.

This blog breaks down the different types of AI, how AI‑driven threats are evolving, and why treating AI agents as governed, privileged identities is critical to maintaining control, trust, and security.

Understanding AI and Its Security Implications

Q: What is AI?

A: AI, or Artificial Intelligence, refers to the development of computer systems and algorithms that can perform tasks typically associated with human intelligence, such as decision-making, learning, and problem-solving.

AI systems use large amounts of data, statistical models, and machine learning techniques to mimic cognitive functions and automate processes, improving efficiency and enabling new capabilities in fields like cybersecurity, healthcare, finance, and more.

Q: What are the different types of AI?

A: There are four main categories of AI:

- Machine Learning (ML)

- Natural Language Processing (NLP)

- Generative AI (large language models like ChatGPT)

- Computer Vision

Q: What is Machine Learning (ML)?

A: Machine Learning enables systems to learn patterns from data and improve task performance without being explicitly programmed. Some security capabilities that leverage machine learning include:

- Anomaly detection in User and Entity Behavior Analytics (UEBA) and Security Information and Event Management (SIEM) platforms

- Threat intelligence correlation

- Malware classification

- Risk scoring
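To make anomaly detection concrete, here is a minimal sketch that learns a baseline from past activity and flags observations that deviate sharply from it. It uses a simple z-score test, which is far cruder than what UEBA or SIEM products actually do; the login counts and the threshold of 3 standard deviations are illustrative assumptions.

```python
from statistics import mean, stdev

def is_anomalous(history: list[float], observation: float, threshold: float = 3.0) -> bool:
    """Flag an observation more than `threshold` standard deviations
    away from the baseline learned from `history`."""
    if len(history) < 2:
        # Not enough data to learn a baseline, so do not flag anything.
        return False
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # A perfectly flat baseline: any change counts as a deviation.
        return observation != mu
    return abs(observation - mu) / sigma > threshold

# Hypothetical daily login counts for one service account.
baseline = [12, 15, 11, 14, 13, 12, 16]
print(is_anomalous(baseline, 14))  # within the normal range
print(is_anomalous(baseline, 90))  # far outside the learned baseline
```

In a real deployment the baseline would be recomputed continuously and combined with other signals before raising an alert.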

Q: What is Natural Language Processing (NLP)?

A: Natural Language Processing allows computers to understand, interpret, and generate human language. NLP powers capabilities such as:

- Phishing detection

- Log analysis

- Security document analysis

- Chatbots for security awareness

Q: What is Generative AI?

A: Generative AI, which includes Large Language Models (LLMs) like ChatGPT, creates new content (text, images, code, and more) by leveraging patterns learned from existing data. Defenders and attackers alike use it for:

- Code generation (and vulnerability introduction risk)

- Social engineering at scale

- Security policy drafting

- Incident response assistance

Q: What is Computer Vision?

A: Computer Vision enables machines to interpret and analyze visual information from images and videos. It can help with:

- Biometric security (facial and fingerprint recognition)

- Document verification

- Visual threat detection

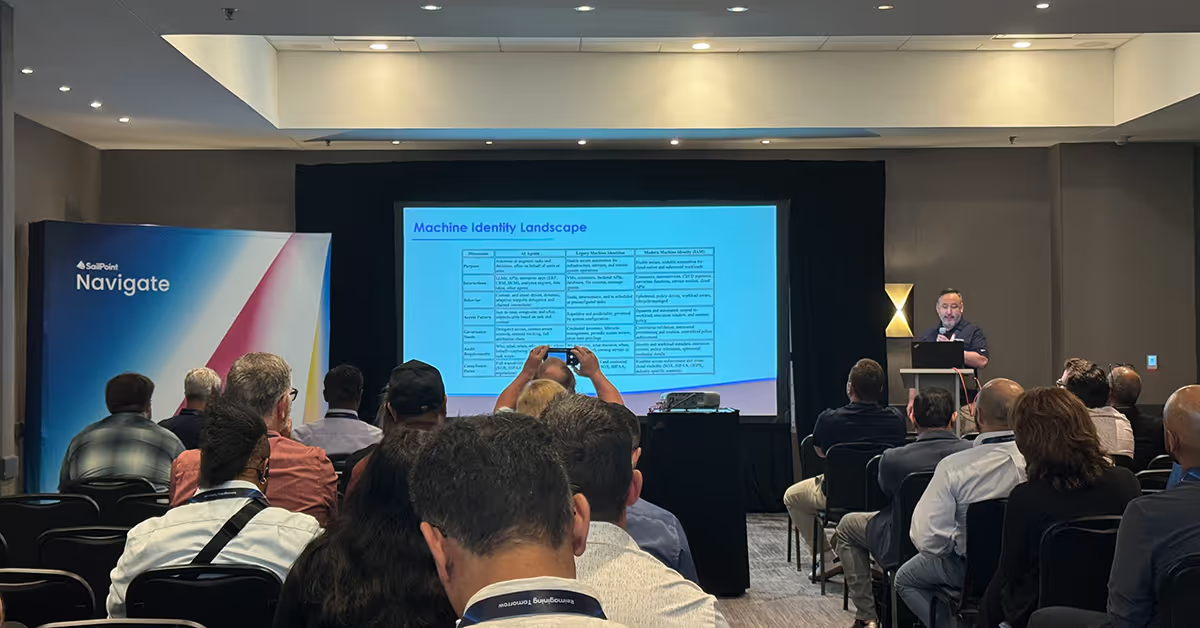

Why AI Changes the Identity Threat Landscape

Q: How accurate is AI?

A: AI is probabilistic rather than deterministic, meaning its outputs rely on statistical confidence instead of guaranteed, repeatable results. Because AI can be wrong and isn’t foolproof, its decisions shouldn’t be treated like hardcoded rules. Human oversight remains essential, especially for critical security decisions.
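One way to keep a human in the loop is to treat a model's confidence score as an input to a routing decision rather than as a final verdict. The sketch below assumes a hypothetical `ModelVerdict` type and a 0.95 auto-apply threshold; neither comes from any particular product, and a raw confidence score only approximates the chance of being right if the model is well calibrated.

```python
from dataclasses import dataclass

@dataclass
class ModelVerdict:
    label: str         # e.g. "malicious" or "benign"
    confidence: float  # model score in [0, 1]; a statistical estimate, not a guarantee

def route(verdict: ModelVerdict, critical: bool, auto_threshold: float = 0.95) -> str:
    """Decide whether an AI verdict may be applied automatically or needs a human."""
    if critical:
        # Critical security decisions always go to a human, whatever the score.
        return "human_review"
    if verdict.confidence >= auto_threshold:
        return "auto_apply"
    # Low-confidence output is a suggestion, not a hardcoded rule.
    return "human_review"

print(route(ModelVerdict("malicious", 0.99), critical=False))
print(route(ModelVerdict("malicious", 0.99), critical=True))
```

The design choice here is that criticality overrides confidence: even a very confident model does not get to make a high-impact decision on its own.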

Q: What are some tips for keeping up with AI’s evolution?

A: The bottom line: AI is a force multiplier. It amplifies capability for attackers and defenders alike, demands updated governance and risk management approaches, and still relies heavily on data quality and human oversight.

To keep up with AI’s evolution, focus on understanding where it meaningfully impacts your business and security posture rather than chasing every new headline. Stay grounded by recognizing how AI already operates on both sides of cybersecurity, powering advanced threats while also strengthening tools like detection, analytics, and response. By tracking real outcomes in your existing technologies instead of marketing hype, you can stay informed without being overwhelmed.

Q: How do I separate AI hype from truly game-changing technology?

A: It’s easy to get lost in the volume of new tools, claims, and innovations surrounding AI. The key to cutting through hype is recognizing that AI is both widely impactful and often misunderstood. Yes, you should be paying attention to how AI affects your business and cybersecurity posture, but you don’t need to buy into every bold promise or headline.

Is it Too Late to Govern AI?

Q: Am I too late to control AI in my organization?

A: No, it’s not too late. AI’s rapid growth and constant attention mean there is a wide range of self-guided resources and professional support available to help you evaluate and harness AI effectively. The key is to stay engaged by embracing the momentum and learning proactively. With AI, the greater risk is inaction: organizations that do not act early risk being left behind.

Governing AI Access, Data, and Privilege

Q: How can I control who (human or AI) is allowed to use which AI tools, models, and data?

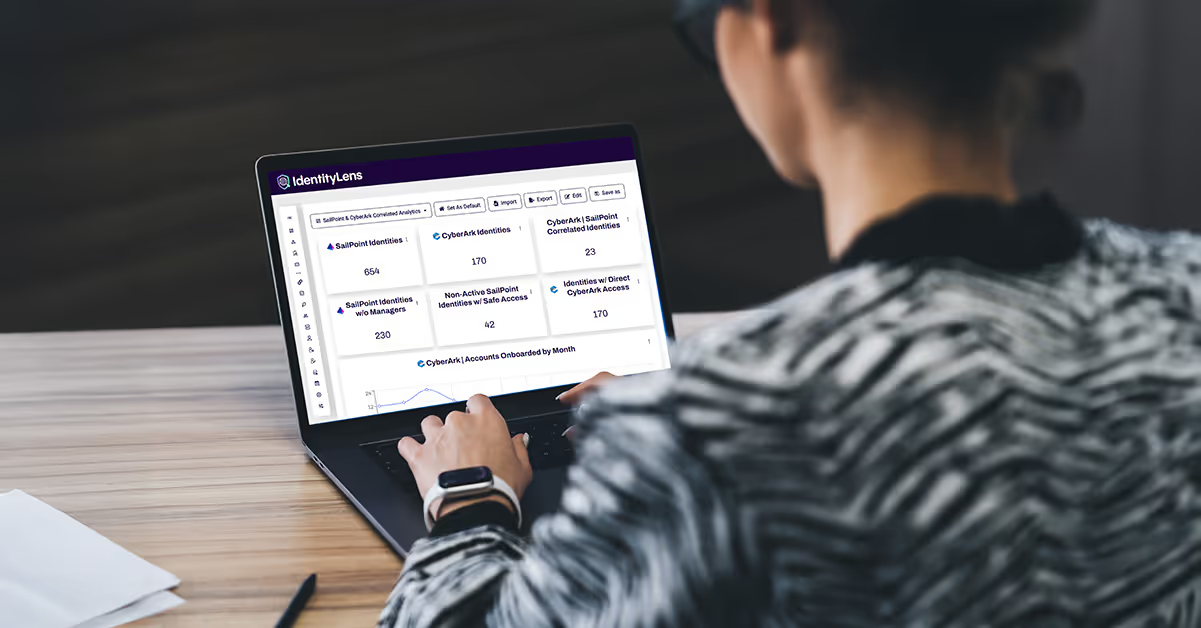

A: Organizations can control AI usage by implementing strong Identity and Access Management (IAM) that defines which users, AI agents, and applications can access specific tools, models, and data. Secure authentication methods such as SSO and MFA should be paired with defined non‑human identities for AI, each tied to a clear owner, role, and approved data access. Role‑based permissions and regular audits help ensure policies are enforced and accountability is maintained across AI environments.
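As a rough illustration, the policy model described above fits in a few lines: every principal, human or AI agent, carries an accountable owner and a set of roles, and roles map to the AI tools it may use. The role names, tool names, and `can_use` helper are hypothetical, and a real deployment would rely on an IAM platform or identity provider rather than an in-memory dictionary.

```python
from dataclasses import dataclass
from enum import Enum

class Kind(Enum):
    HUMAN = "human"
    AI_AGENT = "ai_agent"

@dataclass(frozen=True)
class Identity:
    id: str
    kind: Kind
    owner: str                  # accountable human (a person owns their own identity)
    roles: frozenset
    mfa_verified: bool = False  # set by the SSO/MFA sign-in flow for human users

# Hypothetical mapping of roles to the AI tools and models they may use.
ROLE_PERMISSIONS = {
    "analyst": {"chat-assistant"},
    "soc-automation": {"log-summarizer", "triage-model"},
}

def can_use(identity: Identity, tool: str) -> bool:
    """Default deny: grant access only through an explicitly mapped role."""
    if not identity.owner:
        # Unowned identities, human or AI, are never authorized.
        return False
    if identity.kind is Kind.HUMAN and not identity.mfa_verified:
        # Humans must complete MFA; AI agents would authenticate with
        # their own workload credentials, which this sketch does not model.
        return False
    return any(tool in ROLE_PERMISSIONS.get(role, set()) for role in identity.roles)

bot = Identity("triage-bot", Kind.AI_AGENT, "alice", frozenset({"soc-automation"}))
print(can_use(bot, "triage-model"))
```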

Q: How do I get visibility and control over what data AI is allowed to access?

A: Organizations must enforce AI data access through strong Identity and Access Management (IAM) controls, including role‑ and attribute‑based access policies tied to data classification and data loss prevention (DLP) systems. AI models and agents should only access explicitly approved datasets via allow‑lists, with all training, prompts, and tool usage logged for traceability. Treating AI as a managed identity ensures its data access is transparent, auditable, and aligned to business intent.
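The sketch below shows the allow-list idea with default deny: an agent may read a dataset only if that dataset is on its explicit list and its classification does not exceed the agent's ceiling, and every decision is logged with its purpose. The classification levels, dataset names, and agent policy are hypothetical; in practice these labels would come from your data classification and DLP tooling.

```python
import logging
from enum import IntEnum

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("ai-data-access")

class Classification(IntEnum):
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3

# Hypothetical catalog: dataset name -> classification label.
DATASETS = {
    "kb-articles": Classification.PUBLIC,
    "ticket-history": Classification.INTERNAL,
    "hr-records": Classification.RESTRICTED,
}

# Hypothetical per-agent policy: explicit allow-list plus a classification ceiling.
AGENT_POLICY = {
    "support-agent": {
        "allow": {"kb-articles", "ticket-history"},
        "max_class": Classification.INTERNAL,
    },
}

def may_read(agent_id: str, dataset: str, purpose: str) -> bool:
    """Default deny; `purpose` is e.g. "training", "prompt", or "tool"."""
    policy = AGENT_POLICY.get(agent_id)
    label = DATASETS.get(dataset)
    allowed = (
        policy is not None
        and label is not None  # PUBLIC == 0 is falsy, so compare to None explicitly
        and dataset in policy["allow"]
        and label <= policy["max_class"]
    )
    # Log every decision, allowed or denied, for traceability.
    log.info("agent=%s dataset=%s purpose=%s allowed=%s", agent_id, dataset, purpose, allowed)
    return allowed
```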

Q: How do I make sure policies governing AI identities are enforced?

A: AI identities must be formally provisioned, owned, and governed like any other privileged identity, with continuous monitoring and automated policy enforcement. Regular access reviews, detailed activity logging, and compliance reporting provide accountability by linking every AI action back to a responsible human owner. Automated discovery and reporting tools help to continuously validate that AI behavior remains aligned with organizational policies and risk tolerance.
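Part of this validation can be automated. Below is a minimal sketch of a recurring check that flags AI identities with no accountable owner or an overdue access review; the record fields and the 90-day review cadence are assumptions for illustration, not a standard.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List, Optional

@dataclass
class AIIdentityRecord:
    id: str
    owner: Optional[str]        # responsible human owner, if any
    last_review: Optional[date] # date of the last completed access review

def review_findings(records: List[AIIdentityRecord], today: date,
                    max_age: timedelta = timedelta(days=90)) -> List[str]:
    """Return human-readable findings for reporting or ticketing."""
    findings = []
    for r in records:
        if not r.owner:
            findings.append(f"{r.id}: no accountable owner")
        if r.last_review is None or today - r.last_review > max_age:
            findings.append(f"{r.id}: access review overdue")
    return findings

records = [
    AIIdentityRecord("triage-bot", "alice", date(2026, 1, 10)),
    AIIdentityRecord("legacy-bot", None, date(2025, 6, 1)),
    AIIdentityRecord("new-bot", "bob", None),
]
for finding in review_findings(records, today=date(2026, 2, 5)):
    print(finding)
```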

Why AI Must Be Treated as a Managed Identity

Q: Why should AI agents that access sensitive data or hold admin-level permissions be managed as privileged identities?

A: AI agents often operate autonomously and may require elevated access to perform administrative, analytical, or security‑critical tasks at machine speed. Assigning AI agents explicit privileged identities allows organizations to apply least‑privilege controls, enforce guardrails, and log every action. This makes AI activity intentional, auditable, and accountable rather than implicit and unmanaged.
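To show what enforcing guardrails and logging every action can look like in code, here is a sketch of a decorator that lets an agent perform only the actions it has been explicitly granted and records every attempt along with the agent's human owner. The agent registry, action names, and `governed` decorator are hypothetical; in practice a PAM platform would hold this policy and the audit trail.

```python
import functools
import logging

logging.basicConfig(level=logging.INFO)
audit = logging.getLogger("ai-audit")

# Hypothetical registry: agent id -> (accountable owner, explicitly granted actions).
AGENTS = {
    "patch-bot": ("alice@example.com", {"read_inventory", "stage_patch"}),
}

class ActionDenied(PermissionError):
    pass

def governed(action: str):
    """Allow the wrapped action only for agents granted it; audit every attempt."""
    def wrap(fn):
        @functools.wraps(fn)
        def inner(agent_id: str, *args, **kwargs):
            owner, granted = AGENTS.get(agent_id, (None, set()))
            permitted = action in granted
            # Each record ties the action back to a responsible human owner.
            audit.info("agent=%s owner=%s action=%s permitted=%s",
                       agent_id, owner, action, permitted)
            if not permitted:
                raise ActionDenied(f"{agent_id} may not {action}")
            return fn(agent_id, *args, **kwargs)
        return inner
    return wrap

@governed("stage_patch")
def stage_patch(agent_id: str, host: str) -> str:
    return f"patch staged on {host}"

@governed("reboot_host")
def reboot_host(agent_id: str, host: str) -> str:
    return f"{host} rebooting"

print(stage_patch("patch-bot", "web-01"))
```

Here `reboot_host("patch-bot", "web-01")` would be logged and then refused, because the agent was never granted that action.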

Q: How do I apply least-privilege access and Zero Trust security to AI agents?

A: Use a trusted Privileged Access Management (PAM) platform to ensure AI agents operate under Zero Trust and least-privilege conditions. Policies should enforce the following:

- No implicit trust for AI agents or models; trust must be reviewed and granted explicitly

- Just-in-time (JIT) access for AI tools and actions, only when required

- Segmented permissions for inference, training, and execution

- Strong authentication and step-up access for elevated permissions

- Continuous access reviews and enforced AI security policies

- Minimal standing privileges and clear ownership of every AI agent
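Several of these policies can be combined in a small just-in-time broker: each grant is scoped to one segment (inference, training, or execution), requires an explicit approver, expires after a time-to-live, and demands step-up authentication for the elevated segment. This is a toy model under stated assumptions (execution is treated as the only elevated segment, and a `step_up_ok` flag stands in for a real step-up flow), not the API of any PAM product.

```python
import time
from dataclasses import dataclass
from typing import List, Optional

SEGMENTS = {"inference", "training", "execution"}
ELEVATED = {"execution"}  # assumption: execution is the elevated segment

@dataclass
class Grant:
    agent_id: str
    segment: str
    expires_at: float

class JITBroker:
    """Issues short-lived, segment-scoped grants; no access is standing."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock
        self._grants: List[Grant] = []

    def request(self, agent_id: str, segment: str, ttl_s: float,
                approved_by: Optional[str] = None, step_up_ok: bool = False) -> Grant:
        if segment not in SEGMENTS:
            raise ValueError(f"unknown segment {segment!r}")
        if not approved_by:
            raise PermissionError("explicit approval required; no implicit trust")
        if segment in ELEVATED and not step_up_ok:
            raise PermissionError("step-up authentication required for elevated access")
        grant = Grant(agent_id, segment, self._clock() + ttl_s)
        self._grants.append(grant)
        return grant

    def is_allowed(self, agent_id: str, segment: str) -> bool:
        now = self._clock()
        # Drop expired grants so access does not linger.
        self._grants = [g for g in self._grants if g.expires_at > now]
        return any(g.agent_id == agent_id and g.segment == segment for g in self._grants)

broker = JITBroker()
broker.request("triage-bot", "inference", ttl_s=300, approved_by="alice")
print(broker.is_allowed("triage-bot", "inference"))
print(broker.is_allowed("triage-bot", "training"))
```

Injecting the clock keeps the expiry logic testable; a production broker would also revoke the underlying credentials when a grant expires rather than only forgetting it.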

Q: Why is it necessary to treat AI agents as managed identities?

A: Building visibility and governance around AI identities is not just a security best practice. It is essential for maintaining control as AI becomes more deeply embedded across your environment. By treating AI agents as managed identities and using the right tools to monitor and audit their activity, organizations can stay ahead of risk while unlocking the benefits of responsible AI adoption.

Preparing for What Comes Next

AI is not a future problem. It is already reshaping how identities behave, how access is granted, and how attackers operate. Organizations that extend identity governance, privilege management, and Zero Trust principles to AI agents will be better positioned to adopt AI responsibly without sacrificing security or control. The path forward is not to slow AI down, but to govern it intentionally.
